Football Game Prediction Project

Football games prediction project

Authors: Kaixin Wang & Qin Hu

Time: Summer Session A 2019

Introduction

In this regression competition project, the goal is to predict the numeric variable Wins from the Football dataset, which represents the number of football games that a team won during a season of 16 games.

Method and Model

Regression model: The regression model that we decide to adopt is a Multiple Lienar Regression (MLR) model:

Reasons in the adopting MLR model:

- Since the total number of predictors in the dataset is p = 31, which is relatively small and the total number of observations is n = 380, our first choice was to fit a MLR model.

- Since MLR has higher interpretability than many other statistical learning methods, and since we are familiar with the predictors and the response to some degree, using a MLR model will help us in the process of variable selection and evaluating the model.

- Some of the statistical learning methods (such as boosting and random forest) tend to overly fit the training set, and thus they don’t have a high prediction accuracy on the testing sets.

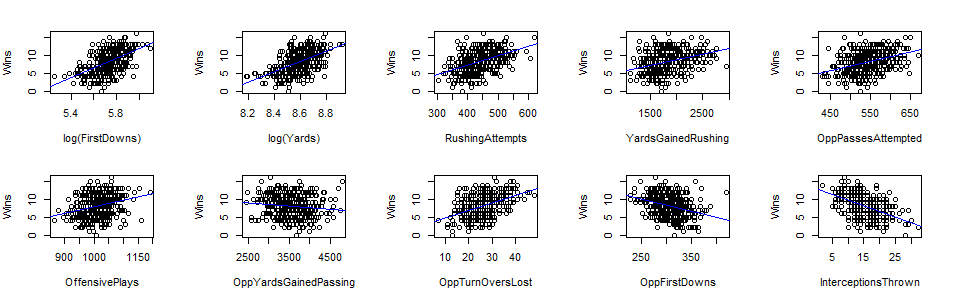

Variable selection: Based on the pairwise scatterplots based on

plot(Wins ~ predictors), we observe that there are several predictors that have a strong linear relationship with the response variable, for example:

Scatterplots of Wins vs. different predictor variables

Steps in the variable selection process:

Based on the fact that a random forest classifier picks $\frac{p}{3}$ predictors by default, where p = total number of predictors = 31 in the dataset, and the fact that there is diminishing amount of increase Radj when adding more than 10 predictors, we chose to include 10 predictor variables.

Transformation of variables: we transformed

YardsandFirstDownsby applying the logarithmic function to bring down their scale, while keeping as much information of the variables as possible. The reason is because these two predictors are very important in predicting the response in the training set based on their high correlation with the response.By looking at the pairwise scatterplots and correlation matrix between the response and the predictors in the training set, and by comparing the Radj and training RSS using different MLR models with 10 predictor variables, the model we selected is as the following:

Wins ~ log(FirstDowns) + RushingAttempts + log(Yards) + YardsGainedRushing + OppPassesAttempted + OffensivePlays + OppYardsGainedPassing + OppTurnOversLost + OppFirstDowns + InterceptionsThrown

| Estimate | Std. Error | t value | Pr(>|t|) | |

|---|---|---|---|---|

| (Intercept) | -101 | 8.763 | -11.52 | 1.906e-26 |

| log(FirstDowns) | 9.539 | 1.919 | 4.971 | 1.022e-06 |

| RushingAttempts | 0.02328 | 0.003784 | 6.153 | 1.981e-09 |

| log(Yards) | 8.307 | 1.919 | 4.329 | 1.929e-05 |

| YardsGainedRushing | -0.0028 | 0.0005276 | -5.307 | 1.927e-07 |

| OppPassesAttempted | 0.02342 | 0.00264 | 8.871 | 3.167e-17 |

| OffensivePlays | -0.02257 | 0.002841 | -7.947 | 2.347e-14 |

| OppYardsGainedPassing | -0.001624 | 0.000352 | -4.615 | 5.44e-06 |

| OppTurnOversLost | 0.1069 | 0.01355 | 7.89 | 3.471e-14 |

| OppFirstDowns | -0.02205 | 0.004662 | -4.73 | 3.201e-06 |

| InterceptionsThrown | -0.1299 | 0.01882 | -6.902 | 2.249e-11 |

| Observations | Residual Std. Error | R2 | Adjusted R2 |

|---|---|---|---|

| 380 | 1.552 | 0.7616 | 0.7552 |

Assumptions, Diagnostics and Results

Assumptions

In the MLR model, yi = β0 + β1xi1 + ⋅ ⋅ ⋅ + βpxip + ϵi, we assume that ϵi ∼ N(0, σ2) are independently distributed.

Diagnostics

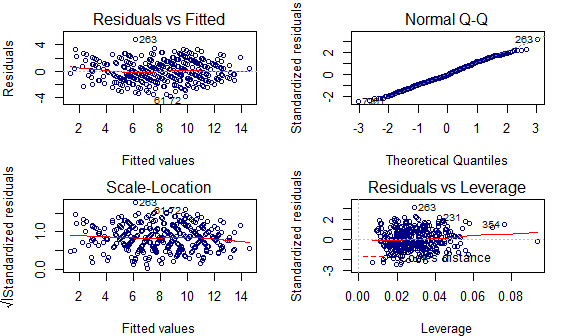

Diagnostics of the MLR model

- From the residuals vs. fitted values plot, the errors are independently distributed and E[ϵ] = 0 (no pattern exists in the plot and the average of errors is around zero throughout).

- From the standardized residuals vs. fitted values plot, the constant variance assumption is satisfied (flat line representing the constant value of the variance).

- From the qq-plot, the normality assumption of error terms is satisfied (points follow the 45-degree straight line passing through the origin).

Conclusion and Discussion

Comparision between summary statistics of the training set and testing set predictions:

summary table of the training set predictions Min. 1st Qu. Median Mean 3rd Qu. Max. 1.352 6.123 8.26 8.139 10.3 14.54 summary table of the testing set predictions Min. 1st Qu. Median Mean 3rd Qu. Max. 0.7344 5.922 7.709 7.544 9.157 13.45 Based on the two summary tables above, we observe that the predictions on the testing set in general are lower than the predictions on the training set.

Interpretations of the model:

(1) Interpretations of the intercept:

Although the intercept (β0 = −101) is statistically significant, it doesn’t make much sense in the real world: a team with no statistics collected or no games played during a season shouldn’t have won a negative number of games, but rather the number should be around zero.

(2) Interpretations of the slopes:

- Predictor variables that increase the value of the response variable are

log(FirstDowns),log(Yards),RushingAttempts,OppPassesAttempted, andOppTurnOversLost.

Based on the context, it is reasonable for variables such as log(FirstDowns) and log(Yards) to have an effect of increasing the response variable, since it is generally the case that the higher these numbers are, the better performance a team might have during a game.

- Predictor variables that decrease the value of the response variable are

YardsGainedRushing,OppYardsGainedPassing,OffensivePlays,OppFirstDownsandInterceptionsThrown.

Similarly, it also makes sense that predictors such as OppYardsGainedPassing and OppFirstDowns to have a negative effect on the value of the response, since these numbers represent the performance level of the opponent in a game.

(3) Possible improvements of the model:

Due to the time limit, we only transformed two predictor variables by taking their logarithmic values. Further variable transformations should be taken into considertaion, such as taking the ratio of predictor variables to include more information into the model, while keeping the total number of predictors employed in the model small. For example, one possible new variable could be defined as $\frac{\text{FirstDowns}}{\text{OppFirstDowns}}$, which represents the number of first downs of the team relavant to the number of first downs of the opponent.